Leveraging Large Language Models for Enhancing the Understandability of Generated Unit Tests

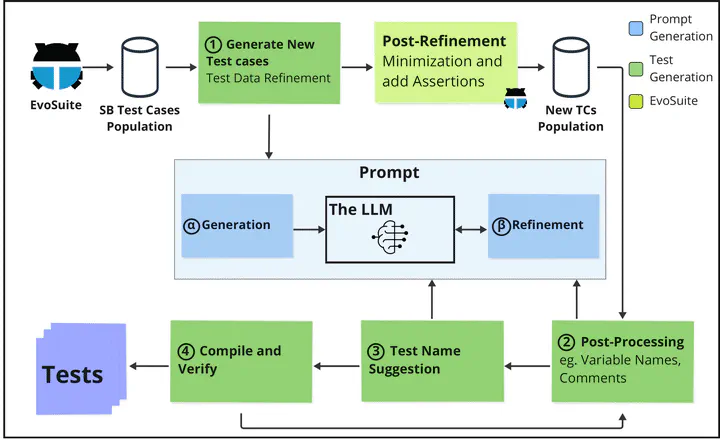

UTGen Architecture

Abstract

Automated unit test generators, particularly search-based software testing tools like EvoSuite, are capable of generating tests with high coverage. Although these generators alleviate the burden of writing unit tests, they often pose challenges for software engineers in terms of understanding the generated tests. To address this, we introduce UTGen, which combines search-based software testing and large language models to enhance the understandability of automatically generated test cases. We achieve this enhancement through contextualizing test data, improving identifier naming, and adding descriptive comments. Through a controlled experiment with 32 participants from both academia and industry, we investigate how the understandability of unit tests affects a software engineer’s ability to perform bug-fixing tasks. We selected bug-fixing to simulate a real-world scenario that emphasizes the importance of understandable test cases. We observe that participants working on assignments with UTGen test cases fix up to 33% more bugs and use up to 20% less time when compared to baseline test cases. From the post-test questionnaire, we gathered that participants found that enhanced test names, test data, and variable names improved their bug-fixing process.
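To make the three enhancements concrete (contextualized test data, improved identifier names, descriptive comments), the following is a minimal hand-written sketch and not actual UTGen or EvoSuite output. The `ShoppingCart` class, both test methods, and all test data are hypothetical, and plain boolean-returning methods stand in for JUnit tests so that the example runs without dependencies.

```java
import java.util.ArrayList;
import java.util.List;

public class UnderstandabilitySketch {

    // Hypothetical class under test, invented purely for this illustration.
    static class ShoppingCart {
        private final List<String> names = new ArrayList<>();
        private final List<Double> prices = new ArrayList<>();

        void addItem(String name, double price) {
            if (price < 0) {
                throw new IllegalArgumentException("price must be non-negative");
            }
            names.add(name);
            prices.add(price);
        }

        double total() {
            double sum = 0.0;
            for (double price : prices) {
                sum += price;
            }
            return sum;
        }
    }

    // Written in the style often associated with search-based generators:
    // numbered test name, type-derived variable names, arbitrary input data.
    public static boolean test0() {
        ShoppingCart shoppingCart0 = new ShoppingCart();
        shoppingCart0.addItem("x#q", 1.0);
        shoppingCart0.addItem("", 2.0);
        double double0 = shoppingCart0.total();
        return double0 == 3.0;
    }

    // The same check rewritten by hand along the three dimensions named in
    // the abstract (assumed result, not produced by any tool).
    public static boolean totalIsSumOfAddedItemPrices() {
        // Arrange: a cart holding two realistically named items.
        ShoppingCart cart = new ShoppingCart();
        cart.addItem("notebook", 1.0);
        cart.addItem("pencil", 2.0);

        // Act: compute the total price of the cart.
        double cartTotal = cart.total();

        // Assert: the total equals the sum of the two item prices.
        return cartTotal == 3.0;
    }

    public static void main(String[] args) {
        System.out.println("test0: " + test0());
        System.out.println("totalIsSumOfAddedItemPrices: " + totalIsSumOfAddedItemPrices());
    }
}
```

Both methods exercise identical behavior and pass for the same reason; only the naming, data, and comments differ, which is the kind of difference the controlled experiment evaluates.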

Publication

International Conference on Software Engineering (ICSE)
